My friend John W. Scott has written a very perceptive takedown of a bad-science story from a few months ago. In 2015, some two dozen international news sites reported that leaving beds unmade helps fight dust mites; all of them trace back to a speculative BBC article from 2005, and the original speculation was never confirmed or tested. Read more here:

2015-12-28

2015-12-21

Short Response to Flipping Classrooms

A short, possible response to the proponents of "flipping classrooms" (as though it were really a new technique): Presumably we agree that students must do some kind of work outside the classroom. Then, as instructors, we might ask ourselves whether we are more fulfilled by: (a) leading classroom discussions about the fundamental concepts of the discipline, or (b) serving as technicians debugging work on particular applications of those principles.

Personally, in my classes I do manage to include both aspects, but more of the time goes to the former. If forced to pick one or the other, I would surely pick (a).

2015-12-14

Why m for Slope?

Question: Why do we use m for the slope of a line?

Personally, I always assumed that we use m because it's the numerical multiplier on the independent variable in the slope-intercept form equation y = mx + b.

Michael Sullivan's College Algebra (8th Ed., Sec. 2.3) says this as part of Exercise #133:

The accepted symbol used to denote the slope of a line is the letter m. Investigate the origin of this symbolism. Begin by consulting a French dictionary and looking up the French word monter. Write a brief essay on your findings.

Of course, "monter" is a French verb which means "to climb" or "to go up".

But others disagree. Wolfram MathWorld says the following, along with citations of particular early usages (http://mathworld.wolfram.com/Slope.html):

J. Miller has undertaken a detailed study of the origin of the symbol m to denote slope. The consensus seems to be that it is not known why the letter m was chosen. One high school algebra textbook says the reason for m is unknown, but remarks that it is interesting that the French word for "to climb" is "monter." However, there is no evidence to make any such connection. In fact, Descartes, who was French, did not use m (Miller). Eves (1972) suggests "it just happened."

The Math Forum at Drexel discusses this more, including a quote from J. Miller himself (http://mathforum.org/dr.math/faq/faq.terms.html):

It is not known why the letter m was chosen for slope; the choice may have been arbitrary. John Conway has suggested m could stand for "modulus of slope." One high school algebra textbook says the reason for m is unknown, but remarks that it is interesting that the French word for "to climb" is monter. However, there is no evidence to make any such connection. Descartes, who was French, did not use m. In Mathematical Circles Revisited (1971) mathematics historian Howard W. Eves suggests "it just happened."

The Grammarphobia site takes up the issue likewise, citing the above, and mostly knocking down the existing theories as lacking support. They end with this witticism by Howard W. Eves (who taught at my alma mater of U. Maine, although before my time):

When lecturing before an analytic geometry class during the early part of the course... one may say: 'We designate the slope of a line by m, because the word slope starts with the letter m; I know of no better reason.'

To bring things full circle, I would point out that the English word "multiplication" is spelled identically in French (and nearly the same in Latin, Italian, Spanish, Portuguese, Danish, Norwegian, and Romanian), so lacking any other historical evidence, I don't see why m for "multiplication" isn't considered as a theory in the sources above. (Compare to k for "koefficient" in Swedish textbooks, per Wolfram.)

2015-12-07

That Time I Didn't Get the Job

Here's a story that I occasionally share with my students. Back around 2001, I had left my second computer gaming job in Boston, and was still interviewing for other jobs in the industry. I had an interview at a company based in Andover, and it was mostly an hour or two in a conference room with one other staff member. I no longer recall who it was, or whether they were in engineering or production. At any rate, part of it was some standard (at the time) "code this simple thing on the whiteboard" tests of basic programming ability.

One of the questions he gave me was "write a function that takes a numeric string and converts it to the equivalent positive integer". Well, it doesn't get much more straightforward than that. I didn't really even think about it, just immediately jotted down something like the following (my C's a bit rusty now, but it was what we were working in at the time; assumes a null-terminated C string):

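    int parseNumber (const char *s) {
        int digit, sum = 0;
        while (*s) {                 /* loop until the terminating '\0' */
            digit = *s - '0';        /* convert character '0'..'9' to value 0..9 */
            sum = sum * 10 + digit;  /* shift earlier digits up one place, add this one */
            s++;
        }
        return sum;
    }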

Well, the interviewer jumped on me, saying that's obviously wrong, because it's parsing the digits from left to right, whereas place values increase from right to left, and therefore the string must be parsed in reverse order. But, as I explained to him, that's not a problem given the way the multiples of 10 are applied.

I stepped him through an example on the side: say the string is "1234". On the first pass, sum = 0*10 + 1 = 1; on the second pass, sum = 1*10 + 2 = 12; on the third pass, sum = 12*10 + 3 = 123; on the fourth pass, sum = 123*10 + 4 = 1234. Moreover, this is more efficient than the grade-school definition: in this example I've used only 4 multiplications, whereas the elementary expression 1*10^3 + 2*10^2 + 3*10 + 4 would use 8 multiplications (and increasingly more for larger place values, to say nothing of the expense in C of first finding the back end of the string before knowing which place values are which).
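To make the multiplication count concrete, here is a small C sketch comparing the two approaches. Both function names are hypothetical, and each tallies its multiplications through an out-parameter. The right-to-left version deliberately rebuilds every power of 10 from 1, which is one reasonable reading of the "grade-school" method. Its tally for "1234" comes to 10 rather than 8, because it mechanically counts trivial steps such as multiplying by 1; the exact figure depends on bookkeeping, but it grows as n(n+1)/2 for n digits, while the left-to-right loop uses exactly one multiplication per digit.

```c
#include <string.h>

/* Hypothetical "grade-school" parser: find the end of the string first,
   then walk right-to-left, rebuilding each place value 10^i from 1.
   *mults counts every multiplication performed. */
int parseGradeSchool(const char *s, int *mults) {
    size_t len = strlen(s);              /* extra pass to find the back end */
    int sum = 0;
    *mults = 0;
    for (size_t i = 0; i < len; i++) {
        int digit = s[len - 1 - i] - '0';
        int place = 1;
        for (size_t k = 0; k < i; k++) { /* compute 10^i from scratch */
            place *= 10;
            (*mults)++;
        }
        sum += digit * place;            /* scale this digit by its place value */
        (*mults)++;
    }
    return sum;
}

/* Left-to-right parser, same logic as parseNumber above,
   but also counting multiplications: exactly one per digit. */
int parseHorner(const char *s, int *mults) {
    int sum = 0;
    *mults = 0;
    for (; *s; s++) {
        sum = sum * 10 + (*s - '0');
        (*mults)++;
    }
    return sum;
}
```

Both calls return 1234 for "1234"; afterwards the counter holds 10 for `parseGradeSchool` and 4 for `parseHorner`.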

But it was to no avail. No matter how I explained it or stepped through it, my interviewer remained irreparably skeptical. I left, and no job offer followed. The thing is, part of the reason I was so confident, and took zero time to think about it, is that it's literally a textbook example from my assembly language class in college. From George Markowsky, Real and Imaginary Machines: An Introduction to Assembly Language Programming (pp. 105-106):

Postscript: This can also be seen as an application of Horner's Method for evaluating polynomials, which is provably optimal in the number of operations used (see texts such as: Rosen, Discrete Mathematics, 7th Edition, Sec. 3.3, Exercise 14; and Carrano, Data Abstraction & Problem Solving with C++: Walls and Mirrors, 6th Edition, Sec. 18.4.1).
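As a sketch of that connection: Horner's Method evaluates a polynomial by starting from the highest-degree coefficient, then repeatedly multiplying by x and adding the next coefficient. The function name and the coefficient-array convention below are my own assumptions for illustration. With the digits of a numeral as coefficients and x = 10, it performs the same steps as parseNumber; with x = 2 it reads a binary numeral.

```c
#include <stddef.h>

/* Evaluate c[0]*x^(n-1) + c[1]*x^(n-2) + ... + c[n-1] by Horner's Method,
   i.e. ((c[0]*x + c[1])*x + c[2])*x + ... ; one multiply and one add per
   coefficient, and no power of x is ever computed separately. */
long hornerEval(const int *c, size_t n, long x) {
    long acc = 0;
    for (size_t i = 0; i < n; i++)
        acc = acc * x + c[i];
    return acc;
}
```

For example, coefficients {1, 2, 3, 4} at x = 10 give 1234, and {2, -3, 5} at x = 4 evaluate 2x^2 - 3x + 5 = 25.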

However, that wasn't the only time I got static for using this textbook algorithm. As of 2019 I've shared this anecdote online at least a handful of times, and every single time, inevitably, someone argues that they wouldn't allow this solution in their codebase because it's too opaque for normal programmers to understand and maintain. (Ironically, I'm writing this postscript on the 200th anniversary of Horner's paper being presented to the Royal Society of London.)

As it turned out, I never worked in computer games or any kind of software engineering again.

2015-11-30

Short Argument for Tau

Consider the use of tau (τ ≈ 6.28) as a more natural unit for circular measures than pi (π ≈ 3.14). I have a good colleague at school who counter-argues in this fashion: "But it's only a conversion by a factor of two, which should be trivial for us as mathematicians to deal with. And if our students can't handle that, then perhaps they shouldn't be in college."

Among the possible responses to this, here's a quick one (and specific to part of the curriculum that we teach): Scientific notation writes a number in the format \(a \cdot 10^b\). But imagine if instead we had defined it with the format \(a \cdot 5^b\). The difference in the base is also only a factor of 2, but consider how much more complicated it would be to convert between standard notation and this revised scientific notation.
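A quick worked example of the contrast, using 3200 (an arbitrary choice for illustration) and assuming the base-5 version keeps the analogous normalization \(1 \le a < 5\). In base 10 the conversion is just a matter of moving the decimal point; in base 5 it requires knowing the powers of 5 and doing a genuine division:

\[ 3200 = 3.2 \cdot 10^3 \]
\[ 3200 = 1.024 \cdot 5^5, \quad \text{since } 5^5 = 3125 \text{ and } 3200 / 3125 = 1.024 \]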

Lesson: Consider your choice of basis carefully.

2015-11-23

A Bunch of Dumb Things Journalists Say About Pi

A lovely rant by Dave Renfro, via Pat Ballew's blog, here:

2015-11-16

Joyous Excitement

Did you know that this week is the 100th anniversary of Einstein's completion of General Relativity? Specifically, it was on November 18, 1915 that Einstein drafted a paper containing the final fix to his theory, one that accounted for the previously unexplained advance of the perihelion of Mercury. The next week he submitted the paper "The field equations of gravitation" to the Prussian Academy of Sciences, a paper that included what we now refer to simply as "Einstein's equations".

Einstein later recalled of this seminal moment:

For a few days I was beside myself with joyous excitement.

And further:

... in all my life I have not laboured nearly so hard, and I have become imbued with great respect for mathematics, the subtler part of which I had in my simple-mindedness regarded as pure luxury until now.

(Quotes from "General Relativity" by J.J. O'Connor and E.F. Robertson at the School of Mathematics and Statistics, University of St. Andrews, Scotland).

2015-11-09

Measurement Granularity

While answering a question on StackExchange, I came across some very nice little articles by the Six Sigma people on Measurement System Analysis:

Establishing the adequacy of your measurement system using a measurement system analysis process is fundamental to measuring your own business process capability and meeting the needs of your customer (specifications). Take, for instance, cycle time measurements: It can be measured in seconds, minutes, hours, days, months, years and so on. There is an appropriate measurement scale for every customer need/specification, and it is the job of the quality professional to select the scale that is most appropriate.

I like this because the issue comes up a lot in the mathematics of game design: What is the most convenient and efficient scale for a particular system of measurement? And what should we consider when we mindfully choose those units at the outset?

One key example from my D&D gaming is that, from the outset, units of encumbrance (weight carried) were ludicrously set in tenths of a pound, so tracking the gear carried by any character involves adding up units in the hundreds or thousands, frequently requiring a calculator. As a result, D&D encumbrance is infamous for being almost entirely unusable, and is frequently discarded during play. My argument is that this is almost entirely due to an incorrect choice of measurement scale for the task, equivalent to measuring a daily schedule in seconds when what you really need is hours. I've long recommended using the flavorfully archaic scale of "stone" weight (i.e., 14-pound units; see here), although the same advantage could be achieved by taking 5- or 10-pound units as the base. Likewise, I have a tendency to defend other Imperial units of measure as being useful in this sense (see: Human scale measurements), although I might be a bit biased from being so steeped in D&D (another example: a league is about how far one walks in an hour, etc.).

The Six Sigma articles further show a situation where the difference in two production processes is discernible at one scale of measurement, but invisible at another incorrectly-chosen scale of measurement. See more below:

- Measurement System Analysis Resolution, Granularity

- Proper Data Granularity Allows for Stronger Analysis

- Measurement Systems Analysis in Process Industries
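A minimal C sketch of that resolution effect, with hypothetical numbers of my own (not taken from the Six Sigma articles): two cycle times of 62 and 88 seconds are clearly different when recorded to the second, but both become "1 minute" when recorded to the nearest minute. The helper names are illustrative assumptions.

```c
/* Record a non-negative measurement, given in base units (here seconds),
   at a coarser resolution `step`: round to the nearest multiple of step,
   with exact ties rounding up. */
int quantize(int value, int step) {
    return (value + step / 2) / step * step;
}

/* Nonzero if measurements a and b are still distinguishable at this resolution. */
int distinguishable(int a, int b, int step) {
    return quantize(a, step) != quantize(b, step);
}
```

At a step of 1 second, 62 and 88 stay distinct; at a step of 60 seconds, both quantize to 60 and the difference between the two processes vanishes from the data.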

2015-11-02

On Common Core

As people boil the oil and man the ramparts for this decade's education-reform efforts, I've gotten more questions recently about what I think regarding Common Core. Fortunately, I had a chance to look at it recently as part of CUNY's ongoing attempts to refine our algebra remediation and exam structure.

A few opening comments. First, this is purely in regard to the math side of things, and mostly focused on 6th-8th grade and high school Algebra I, the material my colleagues and I are largely involved in remediating (see the standards here: http://www.corestandards.org/Math/... and I would highlight the assertion in the Note on courses & transitions that "Indeed, some of the highest priority content for college and career readiness comes from Grades 6-8."). Second, we must distinguish what Common Core specifies from what it does not: it dictates what students should know at the end of each grade level, but not how that material is to be taught. In general:

The standards establish what students need to learn, but they do not dictate how teachers should teach. Teachers will devise their own lesson plans and curriculum, and tailor their instruction to the individual needs of the students in their classrooms. (Frequently Asked Questions: What guidance do the Common Core Standards provide to teachers?)

Specifically in regards to math:

The standards themselves do not dictate curriculum, pedagogy, or delivery of content. (Note on courses & transitions)

So this foreshadows a two-part answer:

(1) I think the standards look great.

Everything that I've seen in the standards themselves looks smart, rigorous, challenging, core to the subject, and pretty much indispensable to a traditional college curriculum in calculus, statistics, computer programming, and other STEM pursuits. I encourage you to read them at the link above. They include pretty much everything in the standard algebra sequence of the last few centuries or so.

I like the balanced requirement to achieve both conceptual understanding and procedural fluency (http://www.corestandards.org/Math/Practice/). As always, my response in a lot of debates is, "you need both". And this reflects the process of presenting higher-level mathematics theorems: a careful proof, and then applications. The former guarantees correctness and understanding; the latter uses the theorem as a powerful shortcut to get work done more efficiently.

Quick example that I came across last night: "By the end of Grade 3, know from memory all products of two one-digit numbers." (http://www.corestandards.org/Math/Content/3/OA/). That's not a nonsense exercise; it's a necessary tool for later understanding long division, factoring, fractions, rational versus irrational numbers, estimation, the Fundamental Theorems of Arithmetic and Algebra, etc. I was happy to spot that as a case example. (And I deeply wish that we could depend on all of our college students having that skill.)

I like what I see for sample tests. Here are some examples from the nationwide PARCC consortium (by Pearson, of course; http://parcc.pearson.com/practice-tests/math/): I'm looking at the 7th- and 8th-grade and Algebra I tests. They all come in two parts: Part I, short multiple-choice questions, with no calculators allowed; Part II, more sophisticated short-answer (not multiple-choice) questions, with calculators allowed. I think that's great: you need both.

New York State writes its own Common Core tests instead of using PARCC, at least at the high school level (http://www.nysedregents.org/); here I'm looking mostly at Algebra I (http://www.nysedregents.org/algebraone/). Again, there's a nice pattern of one part multiple-choice, the other part short-answer. I wish we could do that in our system. Now, the NYS Algebra I test requires a graphing calculator for the entire exam, which sets my teeth on edge a bit compared to the PARCC tests. Maybe I could live with that as long as students have confirmed mental mastery at the 7th- and 8th-grade level (not that I can confirm that they do). Even the grading rubric shown here for NYS looks fine to me (approximately half-credit for calculation, and half-credit for conceptual understanding and approach on any problem; that's pretty close to what I've evolved to do in my own classes).

In summary: Pretty great stuff as far as published standards and test questions (at least for 7th-8th grade math and Algebra I).

(2) The implementation is possibly suspect.

Rigorous standards and examinations do not, by themselves, solve some of the endemic problems in our primary education system. Granted that "Teachers will devise their own lesson plans and curriculum, and tailor their instruction to the individual needs of the students in their classrooms" (quoted above):

Most teachers in grades K-6, and even 7-8 in some places (note that those are exactly the key grades highlighted above for "some of the highest priority content for college and career readiness"), are not mathematics specialists. In fact, U.S. education school entrants are perennially the very weakest of all incoming college students in proficiency and attitude towards math (also: here). If the teachers at these levels fundamentally don't understand math themselves (don't understand the later algebra and STEM work that it prepares students for), then I have a really tough time seeing how they can understand the Common Core requirements, or effectively select and implement appropriate mathematical curriculum for their classrooms. Sometimes I refer to students at this level as having "anti-knowledge"; I find that it's much easier to instruct a student who has never heard of algebra at all (which sometimes happens for graduates of certain religious programs) than it is to deconstruct and repair the incorrect conceptual frameworks of students with many years of broken instruction.

Before I go on: The best solution to this would be to massively increase salary and benefits for all public-school teachers, and implement top-notch rigorous requirements for entry to education programs (as done in other top-performing nations). A second-best solution, which is probably more feasible in the near-term, would be to place mathematics-specialist teachers in all grades K-12.

The other key problem I see is: how are the test scores generated? We already know that in many places students take tests, and then the test scores are arbitrarily inflated or scaled by the state institutions, manipulating them to guarantee some particular high percentage is deemed "passing" (regardless of actual proficiency, for political purposes). For example, the conversion chart for NYS Algebra I Common Core raw scores to final scores for this past August is shown below (from NYS regents link above):

Now, this test had a maximum of 86 possible points. If we linearly converted this to a percentage, we would just multiply any score by 100/86 ≈ 1.16; that would add 14 points at the top of the scale, about 7 points in the middle, and 0 points at the bottom. But that's not what we see here: the scaling from raw to final is nonlinear. The top adds 14 points, but in the middle it adds 30 or more points across the raw range from 13 to 40.
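The proportional conversion described above is easy to check with a small C sketch (the function names are my own; the 86-point maximum is from the text). It reproduces the +14 points at the top, +7 in the middle, and +0 at the bottom; anything the official chart adds beyond those amounts comes from its nonlinear scaling.

```c
/* Proportional (linear) conversion of a raw score out of maxRaw to a 0-100 scale. */
double linearScaled(int raw, int maxRaw) {
    return 100.0 * raw / maxRaw;
}

/* Points the linear conversion adds on top of the raw score. */
double pointsAdded(int raw, int maxRaw) {
    return linearScaled(raw, maxRaw) - raw;
}
```

For instance, `pointsAdded(86, 86)` is 14.0 and `pointsAdded(43, 86)` is 7.0, while `linearScaled(30, 86)` is about 34.9.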

The final range is 0 to 100, which invites you to think it's a percentage, but it's not. If we consider 60% to be the minimal normal passing mark on a test, for this test that would occur at a raw score of 52; but that gets scaled to a final score of 73, which usually means a middle-C grade. Looking at the 5 performance levels (more or less equivalent to A-through-F letter grades): a performance level of "3" is achieved with a raw score of just 30, which is only 30/86 = 35% of the available points on the test. A performance level of "2" is achieved with a raw score of only 20, that is, 20/86 = 23% of the available points. And these low levels (near random guessing) are considered acceptable for the award of a high school diploma (www.p12.nysed.gov/assessment/reports/commoncore/tr-a1-ela.pdf, p. 19):

In summary: While the publicized standards and exam formats look fine to me, the devil is in the details. On the input end, actual curriculum and instruction are left as undefined behavior in the hands of primary-school teachers who are not specialists, are rarely empowered, and are frequently the very weakest of all professionals in math skills and understanding. And on the output end, grading scales can be manipulated arbitrarily to show any desired passing rate, almost entirely disconnected from the actual level of mastery demonstrated in a cohort of students. So I fear that almost any number of students can go through a system like that and not actually meet the published Common Core standards for being ready for work in college or a career.

2015-10-26

Double Factorial Table

The double factorial is the product of a number and every second natural number less than itself. That is:

\(n!! = \prod_{k = 0}^{ \lceil n/2 \rceil - 1} (n - 2k) = n(n-2)(n-4)...\)

Presentation of the values for double factorials is usually split up into separate even- and odd- sequences. Instead, I wanted to see the sequence all together, as below:
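The combined sequence can be regenerated with a short loop. This is a minimal sketch that implements the product formula above directly (stepping down by 2 from \(n\)), restricted to \(n \ge 0\); the function name is my own.

```python
def double_factorial(n):
    """n!! = n(n-2)(n-4)..., stopping at 2 or 1; 0!! = 1 as the empty product."""
    if n < 0:
        raise ValueError("this sketch handles n >= 0 only")
    result = 1
    for k in range(n, 0, -2):  # n, n-2, n-4, ... down to 1 or 2
        result *= k
    return result


if __name__ == "__main__":
    # Even and odd arguments interleaved in one table, rather than split apart.
    for n in range(11):
        print(n, double_factorial(n))
```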

2015-10-19

Geometry Formulas in Tau

Here's a geometry formula sheet, modified so that all the presentations of circular shapes are in terms of tau (not pi); tack it to your wall and see if anybody spots the difference.

(Original sheet here.)
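As a quick sanity check on the conversion, here is a minimal sketch of a few standard circle and sphere formulas rewritten with \(\tau = 2\pi\) (Python's `math.tau`). These are textbook formulas, not a transcription of the sheet itself, and the function names are hypothetical.

```python
import math


def circle_circumference(r):
    return math.tau * r  # C = tau r        (was 2 pi r)


def circle_area(r):
    return math.tau * r ** 2 / 2  # A = (1/2) tau r^2   (was pi r^2)


def sphere_surface_area(r):
    return 2 * math.tau * r ** 2  # S = 2 tau r^2      (was 4 pi r^2)


def sphere_volume(r):
    return 2 * math.tau * r ** 3 / 3  # V = (2/3) tau r^3  (was (4/3) pi r^3)


if __name__ == "__main__":
    r = 3.0
    print(circle_circumference(r), circle_area(r))
    print(sphere_surface_area(r), sphere_volume(r))
```

The area formulas pick up a factor of 1/2 in tau form, the same shape as other "integrated" formulas like \(\frac{1}{2}at^2\).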

2015-10-12

On Zeration

In my post last week on hyperoperations, I didn't talk much about the operation under addition, the zero-th operation in the hierarchy, which many refer to as "zeration". There is a surprising amount of disagreement about exactly how zeration should be defined.

The standard Peano axioms defining the natural numbers stipulate a single operation called the "successor". This is commonly written S(n), which indicates the next natural number after n. Later on, addition is defined in terms of repeated successor operations, and so forth.

The traditional definition of zeration, per Goodstein, is: \(H_0(a, b) = b + 1\). Now when I first saw this, I was surprised and taken aback. All the other operations start with \(a\) as a "base", and then effectively apply some simpler operation \(b\) times, so it seems odd to start with the \(b\) and just add one to it. (If anything my expectation would have been to take \(a+1\), but that doesn't satisfy the regular recursive definition of \(H_n\) when you try to construct addition.)

As it turns out, when you get to this basic level, you're doomed to lose many of the regular properties of the operations hierarchy. So there's nothing to do but start arguing about which properties to prioritize as "most fundamental" when constructing the definition.

Here are some points in favor of the standard definition \(b+1\): (1) It does satisfy the recursive formula that repeated applications are equivalent to addition (\(H_1\)). (2) It does look passingly like counting by 1, i.e., the Peano "successor" operation. (3) It shares the key identity \(H_n(a, 0) = 1\) that holds for all \(n \ge 3\). (4) Since it is an elementary operation (addition, really), it can be extended from natural numbers to all real and complex numbers in a fashion which is analytic (infinitely differentiable).

But here are some points against the standard definition: (1) It is not "really" a binary operator like the rest of the hierarchy, in that it totally ignores the first parameter \(a\). (2) Because it ignores \(a\), it's not commutative like the other low-level operations n = 1 and 2 (yet like them it is still associative and distributive, or as I sometimes say, collective of the next higher operation). (3) For the same reason, it has no identity element (no way to recover the value \(a\)), which is unique among the entire hyperoperations hierarchy. (4) It's the only hyperoperation which doesn't need a special base case for when \(b = 0\). (5) I might turn around favorable point #3 above and call it weird and unfavorable, in that it is misaligned in this way with operations n = 1 and 2, and it's the only case of one of the key identities being added at a lower level instead of being lost. See how weird that looks below?

So as a result, a variety of alternative definitions have been put forward. I think my favorite is \(H_0(a, b) = max(a, b) + 1\). Again, this looks a lot like counting; I might possibly explain it to a young student as "count one more than the largest number you've seen before". Points in favor: (1) Repeated applications are again the same as addition. (2) It is truly a binary operation. (3) It is commutative, and thus completes the trifecta of commutativity, association, and distribution/collection being true for all operations \(n < 3\). (4) It does have an identity element, in \(b = 0\). (5) It maintains the pattern of losing more of the high-level identities, and in fact perfects the situation in that none of the five identities hold for this zeration (all "no's" in the modified table above for \(n = 0\)). Points against: (1) It isn't exactly the same as the unary Peano successor function. (2) It's non-differentiable, and therefore cannot be extended to an analytic function over the fields of real or complex numbers.
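Some of these properties can be spot-checked mechanically. The sketch below is a brute-force check over small natural numbers (0 through 9), not a proof; it tests only three properties: building addition by the usual recursion \(H_1(a, b) = H_0(a, H_1(a, b-1))\) with \(H_1(a, 0) = a\), commutativity, and the "collection" identity \(H_0(H_1(a, c), H_1(b, c)) = H_1(H_0(a, b), c)\). All function names are my own.

```python
from itertools import product


def zeration_standard(a, b):
    """Goodstein's H_0(a, b) = b + 1; the first argument is ignored."""
    return b + 1


def zeration_max(a, b):
    """The alternative H_0(a, b) = max(a, b) + 1."""
    return max(a, b) + 1


def addition_from(h0, a, b):
    """Build H_1(a, b) from a given H_0: H_1(a, 0) = a, H_1(a, b) = H_0(a, H_1(a, b - 1))."""
    result = a
    for _ in range(b):
        result = h0(a, result)
    return result


def builds_addition(h0, n=10):
    return all(addition_from(h0, a, b) == a + b for a, b in product(range(n), repeat=2))


def is_commutative(h0, n=10):
    return all(h0(a, b) == h0(b, a) for a, b in product(range(n), repeat=2))


def collects_addition(h0, n=10):
    """H_0(a + c, b + c) == H_0(a, b) + c, the n = 0 case of the collection identity."""
    return all(h0(a + c, b + c) == h0(a, b) + c for a, b, c in product(range(n), repeat=3))


if __name__ == "__main__":
    for name, h0 in [("b + 1", zeration_standard), ("max(a, b) + 1", zeration_max)]:
        print(name, builds_addition(h0), is_commutative(h0), collects_addition(h0))
```

Both definitions rebuild addition and satisfy the collection identity; only the max version is commutative.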

There are vocal proponents of a related re-definition: \(H_0(a, b) = max(a, b) + 1\) if a ≠ b, and \(a + 2\) if a = b. The advantage here is that it matches some identities in other operations, like \(H_n(a, a) = H_{n+1}(a, 2)\) and \(H_n(2, 2) = 4\), but I'm less impressed by specific magic numbers like that (as compared to having commutativity and the pattern of actually losing more identities). The disadvantage is obviously that the possibility of adding 2 in the \(a+2\) case gets us even further away from the simple Peano successor function.

And then some people want to establish commutativity so badly that they assert this: \(H_0(a, b) = ln(e^a + e^b)\). That does get you commutativity, but at that point we're so far away from simple counting in natural numbers that I don't even want to think about it.

Final thought: While most people interpret the standard definition of zeration, \(H_0(a, b) = b + 1\) as "counting 1 more place from b", it makes more sense to my brain to turn that around and say that we are "counting b places from 1". That is, ignoring the \(a\) parameter, start at the number 1 and apply the successor function repeatedly b times: \(S(S(S(...S(1))))\), with the \(S\) function appearing \(b\) times. This feels more like "basic" Peano counting, it maintains the sense of \(b\) being the number of times some simpler operation is applied, and it avoids defining zeration in terms of the higher operation of addition. And then you also need to stipulate a special base case for \(b = 0\), like all the other hyperoperations, namely \(H_0(a, 0) = 1\).
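The "counting b places from 1" reading can be written out literally. This minimal sketch uses a hypothetical `successor` helper standing in for Peano's S, starts at 1, applies it b times, and ignores a; the special case \(H_0(a, 0) = 1\) falls out as applying S zero times.

```python
def successor(n):
    """Peano successor S(n): the next natural number after n."""
    return n + 1


def zeration_counting_from_one(a, b):
    """H_0(a, b) read as S(S(...S(1))) with S applied b times; a is ignored."""
    result = 1  # base case: H_0(a, 0) = 1
    for _ in range(b):
        result = successor(result)
    return result


if __name__ == "__main__":
    print([zeration_counting_from_one(7, b) for b in range(6)])
```

On natural numbers this agrees with the standard \(b + 1\), so the difference is one of interpretation rather than of values.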

So maybe the standard definition is the best we can do, and the closest expression of what Peano successor'ing in natural numbers (counting) really indicates. Perhaps we can't really have a "true" binary operator at level \(H_0\), at a point when we haven't even discovered what the number "2" is yet.

P.S. Can we consider defining an operation one level even lower, perhaps \(H_{-1}(a, b) = 1\) which ignores both parameters, just returns the natural number 1, and loses every single one of the regular properties of hyperoperations (including recursivity in the next one up)?

2015-10-05

On Hyperoperations

Consider the basic operations: Repeated counting is addition; repeated addition is multiplication; repeated multiplication is exponentiation. Hyperoperations are the idea of generally extending this sequence. This was first proposed as such, in a passing comment, by R. L. Goodstein in an article to the Journal of Symbolic Logic, "Transfinite Ordinals in Recursive Number Theory" (1947):

At this point, there are a lot of different ways of denoting these operations. There's \(H_n\) notation. There's the Knuth up-arrow notation. There's box notation and bracket notation. The Ackermann function expresses almost the same thing. Conway's chained arrow notation can be used to show them. Some people concisely symbolize the zero-th level operation (under addition) as \(a \circ b\), and the fourth operation (above exponentiation) as \(a \# b\). Wikipedia reiterates Goodstein's original definition like so, for \(H_n (a,b): (\mathbb N_0)^3 \to \mathbb N_0\):

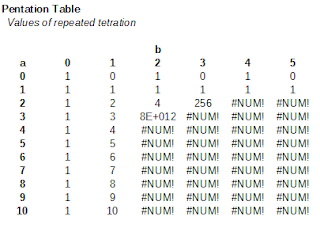

Let's use Goodstein's suggested names for the levels above exponentiation. Repeated exponentiation is tetration; repeated tetration is pentation; repeated pentation is hexation; and so forth. Since I don't see them anywhere else online, below you'll find some partial hyperproduct tables for these next-level operations (and ODS spreadsheet here). Of course, the values get large very fast; you'll see some entries in scientific notation, and then "#NUM!" indicates a place where my spreadsheet could no longer handle the value (that is, something greater than \(1 \times 10^{308}\)).

From this point forward, the hyperoperation tables look passingly similar in this limited view. You have some fixed values in the first two rows and columns; the 2-by-2 result is eternally 4; and everything other than that is so astronomically huge that you can't even usefully write it in scientific notation. Here are some identities suggested above that we can prove pretty easily for all hyperoperations \(n > 3\):

- \(H_n(a, 0) = 1\) (by definition)

- \(H_n(a, 1) = a\)

- \(H_n(0, b) = \) 0 if b odd, 1 if b even

- \(H_n(1, b) = 1\)

- \(H_n(2, 2) = 4\)
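For anyone who wants to rebuild small tables like these, here is a minimal sketch of Goodstein's recursion. Python integers never overflow, so the cutoff near \(10^{308}\) (returning `None` where the spreadsheet shows "#NUM!") is an assumption added to imitate the spreadsheet; it is estimated with logarithms so no gigantic integer is ever built. The names `hyper` and `print_table` are hypothetical.

```python
import math

CAP_DIGITS = 308  # assumed cutoff: treat anything above 10**308 as "#NUM!"


def hyper(n, a, b):
    """H_n(a, b) via Goodstein's recursion, or None if the value would exceed 10**308."""
    if n == 0:
        return b + 1
    if n == 1:
        return a + b
    if n == 2:
        return a * b
    if n == 3:
        # Estimate the size of a**b with logarithms before computing it.
        if a >= 2 and b * math.log10(a) > CAP_DIGITS:
            return None
        return a ** b
    # n >= 4: start from H_n(a, 0) = 1 and apply H_n(a, k) = H_{n-1}(a, H_n(a, k - 1)).
    result = 1
    for _ in range(b):
        result = hyper(n - 1, a, result)
        if result is None:
            return None
    return result


def print_table(n, size=5):
    """Print rows a = 0..size-1 and columns b = 0..size-1 of H_n(a, b)."""
    for a in range(size):
        cells = []
        for b in range(size):
            value = hyper(n, a, b)
            if value is None:
                cells.append("#NUM!")
            elif value > 10 ** 6:
                cells.append(f"{value:.3e}")  # scientific notation for big entries
            else:
                cells.append(str(value))
        print(f"a={a}: " + "  ".join(cells))


if __name__ == "__main__":
    print_table(4)  # tetration
    print_table(5)  # pentation
```

The identities in the list above can then be checked directly against `hyper` for small arguments, e.g. `hyper(n, 0, b)` alternating between 1 and 0.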

Now, to connect up to my post last week, recall the basic properties of real numbers taken as axiomatic at the start of most algebra and analysis classes. Addition and multiplication (n = 1 and 2) are commutative and associative; but exponentiation is not, and neither are any of the higher operations.

Finally consider the general case of distribution, what in my algebra classes I summarize as the "General Distribution Rule" (Principle #2 here). Or perhaps based on last week's observation I might suggest it could be better phrased as "collecting terms of the next higher operation", like \(ac + bc = (a+b)c\) and \(a^c \cdot b^c = (a \cdot b)^c\), or in the general hyperoperational form:

\(H_n(H_{n+1}(a, c), H_{n+1}(b, c)) = H_{n+1}(H_n(a, b), c)\)

Well, just like commutativity and associativity, distribution in this general form also holds for n = 1 and 2, but fails for higher operations. Here's the first counterexample, using \(a \uparrow b\) for exponents (\(H_3\)), and \(a \# b\) for tetration (\(H_4\)):

\((2\#2)\uparrow (0\#2) = 4 \uparrow 1 = 4\), but

\((2 \uparrow 0)\#2 = 1 \# 2 = 1\).
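The same check can be run by brute force. In this minimal sketch, `collects` tests the general form \(H_n(H_{n+1}(a, c), H_{n+1}(b, c)) = H_{n+1}(H_n(a, b), c)\) for a given pair of adjacent operations, and `tetrate` is a hypothetical helper for \(a \# b\) (with \(a \# 0 = 1\)); the search over 0 through 4 is a spot check, not a proof.

```python
from operator import add, mul


def tetrate(a, b):
    """a # b = H_4(a, b): a power tower of b copies of a, with a # 0 = 1."""
    result = 1
    for _ in range(b):
        result = a ** result
    return result


def collects(op_n, op_next, a, b, c):
    """Check H_n(H_{n+1}(a, c), H_{n+1}(b, c)) == H_{n+1}(H_n(a, b), c)."""
    return op_n(op_next(a, c), op_next(b, c)) == op_next(op_n(a, b), c)


small = range(5)
triples = [(a, b, c) for a in small for b in small for c in small]

if __name__ == "__main__":
    print(all(collects(add, mul, *t) for t in triples))  # n = 1: True
    print(all(collects(mul, pow, *t) for t in triples))  # n = 2: True
    print(collects(pow, tetrate, 2, 0, 2))  # n = 3, the counterexample: False
```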

Likewise, what I call the "Fundamental Rules of Exponents" (Principle #1 above, or also here) works only for levels \(n \le 3\), and fails to be meaningful at higher levels of hyperoperation.

2015-09-28

Why Is Distribution Prioritized Over Combining?

So I've come up with this question that's been bothering me for weeks, and I've been searching and asking everyone and everywhere that I can. I suspect that it may have no answer. The question is this:

Consider the properties of real numbers that we take for granted at the start of an algebra or analysis class (commutativity, association, and distribution of multiplying over addition). Granted, the last one, distribution (the transformation \(a(b+c) = ab + ac\)), is effectively equivalent to what we might call "combining like terms" (the transformation \(ax + bx = (a+b)x\)). It seems like the latter is more fundamental and easier to intuit as an axiom, since it resembles simple addition of units (e.g., 3 feet + 5 feet = 8 feet). So historically and/or pedagogically, what was the reason for choosing the name and order we have ("distribution", \(a(b+c)=ab+ac\)), instead of the other option ("combining", \(ax+bx = (a+b)x\)), for the starting axiom?

I suspect now that there simply isn't any reason that we can document. Some expansion on the problem:

In dimensional analysis, some call the idea of only adding or comparing like units the "Great Principle of Similitude". This provides some of my motivation for wishing that we would start with this ("combining") and then derive distribution (using commutativity a few times). Note that this phrase is in many places erroneously attributed to Newton; in truth, the earliest documented usage of the phrase is by Rayleigh in a letter to Nature (No. 2368, Vol. 95; March 18, 1915). I could probably write a whole post just on the hunt for this quote. Big thanks to Juan Santiago, who teaches a class by that name at Stanford (link), for helping me track down the article.
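For concreteness, here is one way that derivation could go (a sketch; the ordering of steps is my own). Take combining like terms, \(ax + bx = (a+b)x\), as the axiom. Commute the product, apply combining right-to-left with \(b, c\) in the roles of \(a, b\) and \(a\) in the role of \(x\), then commute each term back:

\(a(b + c) = (b + c)a = ba + ca = ab + ac\)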

The Math Forum at Drexel discusses some history of the names of the basic properties. The best that Doctor Peterson can track down is that terms such as "distribution" were first used in the late 1700's to 1800's (starting in French in a memoir by Francois Joseph Servois). There's no commentary on why this was picked over alternative formulations. But perhaps the fact that the original discussion was in terms of functions (not binary operators) provides a clue. (For the full French text, see here and search "commutative and distributive").

Here's me asking the question at the StackExchange Mathematics site. Unfortunately, most commentators considered it to be uninteresting. When it got no responses, I cross-posted to the Mathematics Educators site -- which is apparently a huge faux pas, and immediately got it down-moderated into oblivion. The only relevant answer to date was from Benjamin Dickman, who pointed to a very nice quote from Euclid: when he states a similar property in geometric terms (area of a rectangle versus the sum of it being sliced up), it happens to be in the same order as we present the distribution property. But still no word on any reason why it should be in that order and not the reverse.

Observations from a few textbooks that I have lying around:

- Rietz and Crathorne, Introductory College Algebra (1933). Article 4 shows combining like terms, and asserts that's justified by the associative law (which is nonsensical). The distributive property isn't presented until later, in Article 8.

- Martin-Gay, Prealgebra & Introductory Algebra. In the purely numerical prealgebra section, this first shows up as distribution among numbers (Sec 1.5). But the first time it appears in the algebra section with variables it is in fact written and used for combining like terms (Sec 3.1: \(ac + bc = (a+b)c\), although still called the "distributive property"). Combining like terms is actually done even earlier than that on an intuitive basis (see Sec. 2.6, Example 4). Only later is the property presented and used to remove parentheses from variable expressions.

- Bittinger's Intermediate Algebra shows standard distribution, followed immediately by use for combining like terms. Sullivan's Algebra & Trigonometry does the same.

Another thought is that while you can point to distribution as justifying the standard long-multiplication process (across decimal place value), the interior additions are implied and not explicit, and so they don't really serve to develop intuition in the same way that simple unit addition does.

Therefore, I find myself fantasizing about the following. Write a slightly nonstandard algebra textbook that starts by assuming commutativity, association, and the combining-like-terms property (and shortly afterward derives the distribution property). Perhaps for a better name it could be called "collection of like multiplications inside addition", or something like that.

Do you think this would be a better set of axioms for a basic algebra class? Can you think of a solid historical or pedagogical reason why the name and presentation were not the other way around, like this? Likely some more on this later.

2015-09-21

Rational Numbers and Randomized Digits

Here's a quick thought experiment to develop intuition about the cardinality of rational versus irrational decimal numbers. We know that any rational number (a/b with integer a, b and b ≠ 0) has a decimal expansion that either terminates or repeats (and terminating is itself equivalent to ending with a repeating block of all 0's).
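The terminate-or-repeat fact comes from long division: dividing a by b can only ever leave remainders 0 through b - 1, so within b steps the remainder either hits 0 (terminating) or recurs (repeating forever from that point). This minimal sketch makes that concrete for \(a \ge 0, b > 0\); the function name and return format are my own choices.

```python
def decimal_expansion(a, b):
    """Return (integer part, non-repeating digits, repeating digits) of a/b.

    A terminating decimal is reported with a repeating block of "0".
    """
    if a < 0 or b <= 0:
        raise ValueError("this sketch assumes a >= 0 and b > 0")
    integer_part, remainder = divmod(a, b)
    digits = []
    seen = {}  # remainder -> position of the digit it produced first
    while remainder != 0 and remainder not in seen:
        seen[remainder] = len(digits)
        digit, remainder = divmod(remainder * 10, b)
        digits.append(str(digit))
    if remainder == 0:
        return integer_part, "".join(digits), "0"
    start = seen[remainder]  # the cycle begins where this remainder first appeared
    return integer_part, "".join(digits[:start]), "".join(digits[start:])


if __name__ == "__main__":
    for a, b in [(1, 4), (1, 6), (1, 7), (22, 7)]:
        print(f"{a}/{b}", decimal_expansion(a, b))
```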

Consider randomizing decimal digits in an infinite string (say, by using a standard d10 from a roleplaying game, shown above). How likely does it seem that at any point you'll start rolling repeated 0's, and nothing but 0's, until the end of time? It's obviously vanishingly unlikely, so it's effectively impossible that you'll roll a terminating decimal. Alternatively, how probable does it seem that you'll roll some particular block of digits, and then repeat them in exactly the same order, and keep doing so without fail an infinite number of times? Again, it seems effectively impossible.

So this intuitively shows that if you pick any real number "at random" (in this case, generating random decimal digits one at a time), it's effectively certain that you'll produce an irrational number. The proportion of rational numbers can be seen to be practically negligible compared to the preponderance of irrationals.

2015-09-14

Algebra for Cryptography

Cryptography researcher Victor Shoup recently gave a talk at the Simons Institute at Berkeley. Richard Lipton quotes him in one of his interesting observations about cryptography:

He also made another point: For the basic type of systems under discussion, he averred that the mathematics needed to describe and understand them was essentially high school algebra. Or as he said, “at least high school algebra outside the US.”

Quoted here.

2015-09-07

The MOOC Revolution that Wasn't

Three years ago I wrote a review of "Udacity Statistics 101" that went semi-viral, finding the MOOC course to be slapdash, unplanned, and in many cases pure nonsense (link). I wound up personally corresponding with Sebastian Thrun (Stanford professor, founder of Udacity, head of Google's auto-car project) over it, and came away super skeptical of his work. Today, here's a fantastic article about the fallen hopes for MOOCs and Thrun's Udacity in particular -- highly recommended; I'm jealous that I didn't write this.

Just a few short years after promising higher education for anyone with an Internet connection, MOOCs have scaled back their ambitions, content to become job training for the tech sector and for students who already have college degrees...

I want to quote the whole thing here; it's probably best that you just go and read it. Big kudos to Audrey Waters for writing this (and tip to Cathy O'Neil for sharing a link).

“In 50 years,” Thrun told Wired, “there will be only 10 institutions in the world delivering higher education and Udacity has a shot at being one of them.”

Three years later, Thrun and the other MOOC startup founders are now telling a different story. The latest tagline used by Thrun to describe his company: “Uber for Education.”

2015-08-24

On Registered Clinical Trials

A new PLoS ONE study looks at the effect of mandatory pre-registration of medical study methods and outcome measures, starting in 2000. Major findings:

- Studies finding positive effects fell from 57% prior to the registry to just 8% afterward.

- "...focused on human randomized controlled trials that were funded by the US National Heart, Lung, and Blood Institute (NHLBI) [and so required advance registration by a 1997 U.S. law]. The authors conclude that registration of trials seemed to be the dominant driver of the drastic change in study results."

- "Steven Novella of Yale University in New Haven, Connecticut, called the study 'encouraging' but also 'a bit frightening' because it casts doubt on previous positive results..."

- "Many online observers applauded the evident power of registration and transparency, including Novella, who wrote on his blog that all research involving humans should be registered before any data are collected. However, he says, this means that at least half of older, published clinical trials could be false positives. 'Loose scientific methods are leading to a massive false positive bias in the literature,' he writes."

2015-08-16

Is Cohabitation Good for You?

Last week, Ars Technica (and I'm sure other news sites) posted an article on a large-scale survey of health outcomes in Britain, under the headline, "Good news for unmarried couples — cohabitation is good for you" (subtitle: "Married partners tend to be healthy, but living with someone works just as well"). Link.

I'm actually hyper-critical of people who sling around the phrase "correlation does not imply causation" too much in improper cases, but here's a golden example where it does apply: the headline "cohabitation is good for you" is totally unwarranted. Now, the findings do say that married and cohabiting people are healthier than people who live alone. But this could mean that cohabitation causes better health, or that better health causes cohabitation, or some more complicated interaction of the two. One hypothesis is that "cohabitation is good for you [by improving health]"; another is that "being healthy is good for your prospects of getting a partner", i.e., healthy people make for more attractive marriage/cohabitation partners. If you think about it, I'd say that the latter is actually the more common-sense direction of causation here.
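To make the reverse-causation point concrete, here is a toy simulation, a minimal sketch under made-up assumptions (not the British survey's data or model). In this hypothetical world, each person's health score is uniform on [0, 1], the chance of having a partner equals that score, and cohabiting has no effect on health whatsoever. The function name `simulate` and its parameters are purely illustrative.

```python
import random


def simulate(n: int = 10_000, seed: int = 0) -> tuple[float, float]:
    """Hypothetical toy model: health drives partnering, never the reverse.

    Each person gets a health score uniform on [0, 1]. The probability of
    having a partner equals that score, so healthier people partner more
    often. Having a partner never changes anyone's health in this model.
    Returns (mean health of partnered people, mean health of single people).
    """
    rng = random.Random(seed)  # fixed seed so the run is repeatable
    partnered, single = [], []
    for _ in range(n):
        health = rng.random()
        if rng.random() < health:  # healthier -> more likely to have a partner
            partnered.append(health)
        else:
            single.append(health)
    return sum(partnered) / len(partnered), sum(single) / len(single)


with_partner, alone = simulate()
print(f"partnered: {with_partner:.2f}, single: {alone:.2f}")
```

In expectation the partnered group averages about 2/3 and the single group about 1/3, so a survey of this world would find partnered people "healthier" even though, by construction, partnering did nothing for anyone's health.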

2015-07-20

The Difference a Teacher Makes

I had an interesting natural experiment this past spring semester: I was teaching two remedial algebra courses, one in the afternoon, and one in the evening. Same calendar days, same class sizes, identical lectures from day to day, exact same tests, exact same numbers taking the final exam. In one class, only 23% of the registrants passed the final exam, while in the other class 60% passed. (Median scores on the final were 48% in one class and 80% in the other.)

This got me wondering: How much difference does the teacher make in these classes? And the honest answer is: not very much. To be humble about it, I could do everything humanly possible both inside and outside the classroom as a teacher, work at maximal effort all the time (and that is generally my goal), and have it make very little difference in the overall classroom result. The example here of enormous variation between two sections, identically treated by me as an instructor, really highlights this fact.

On this point, I found a 2013 paper from ETS by Edward H. Haertel, "Reliability and Validity of Inferences About Teachers Based on Student Test Scores" (principally about the unreliability of teacher VAM scores), which summarizes several studies as finding that the difference in test scores attributable to teacher proficiency is only about 10% (see p. 5). That actually seems about right based on my recent experiences.

2015-06-08

Why Technology Won't Fix Schools

Kentaro Toyama is a professor at U. Michigan, a fellow at MIT, and a former researcher for Microsoft. He's just written a book titled "Geek Heresy: Rescuing Social Change from the Cult of Technology" (although I'd quibble with the title in one way: practically all geeks I know consider the following to be obvious and common-sense). He writes:

But no matter how good the design, and despite rigorous tests of impact, I have never seen technology systematically overcome the socio-economic divides that exist in education. Children who are behind need high-quality adult guidance more than anything else. Many people believe that technology “levels the playing field” of learning, but what I’ve discovered is that it does no such thing.

And, oh, how much I agree with the following:

... what I’ve arrived at is something I think of as technology’s Law of Amplification: Technology’s primary effect is to amplify human forces. In education, technologies amplify whatever pedagogical capacity is already there.

More at the Washington Post (link).

2015-06-01

Noam Chomsky on Corporate Colleges

Noam Chomsky speaks on issues of non-teaching administrators taking over America's colleges, the use of part-time and non-governing faculty, and related issues:

The university is probably the social institution in our society that comes closest to democratic worker control. Within a department, for example, it’s pretty normal for at least the tenured faculty to be able to determine a substantial amount of what their work is like: what they’re going to teach, when they’re going to teach, what the curriculum will be. And most of the decisions about the actual work that the faculty is doing are pretty much under tenured faculty control.

Now, of course, there is a higher level of administrators that you can’t overrule or control. The faculty can recommend somebody for tenure, let’s say, and be turned down by the deans, or the president, or even the trustees or legislators. It doesn’t happen all that often, but it can happen and it does. And that’s always a part of the background structure, which, although it always existed, was much less of a problem in the days when the administration was drawn from the faculty and in principle recallable.

Under representative systems, you have to have someone doing administrative work, but they should be recallable at some point under the authority of the people they administer. That’s less and less true. There are more and more professional administrators, layer after layer of them, with more and more positions being taken remote from the faculty controls.

More at Salon.com.

2015-05-25

Online Courses Fail at Community Colleges

More evidence for one of the most uniformly verified findings I've seen in education: online courses strike out for community college students. From a paper by researchers at U.C.-Davis, presented at the American Educational Research Association's conference in April:

“In every subject, students are doing better face-to-face,” said Cassandra Hart, one of the paper’s authors. “Other studies have found the same thing. There’s a strong body of evidence building up that students are not doing quite as well in online courses, at least as the courses are being designed now in the community college sector.”

More at Alternet.org.

2015-05-18

Teaching Evolution in Kentucky

A professor discusses teaching evolution in Kentucky. "Every time a student stomps out of my auditorium slamming the door on the way, I can’t help but question my abilities." (Link.)

(Thanks to Jonathan Scott Miller for the link.)

2015-05-11

On Definitions

From the MathBabe blog by poster EllipticCurve, here:

Mathematical definitions mean nothing until you actually use them in anger, i.e. to solve a problem...

2015-05-04

Quad Partners Buys Inside Higher Ed

From Education News in January:

Although there has been no public announcement made, Quad Partners, a New York private equity firm devoted to the for-profit college industry, recently gained a controlling stake in the education trade publication Inside Higher Ed (IHE). The publication routinely reports on for-profit colleges and surrounding policy disputes, and the publication is now listed among investments on the Quad Partners website.

Read more here.

2015-04-27

ETS on Millennials

A fascinating report on international education and job-ready skills from the Educational Testing Service. Particularly interesting to me, as it bears almost directly on committee work that I've been doing lately. Core findings:

- While U.S. millennials have far higher degree certifications than prior generations, their literacy, numeracy, and use-of-technology skills are demonstrably lower.

- U.S. millennials rank 16th of 22 countries in literacy. They are 20th of 22 in numeracy. They are tied for last in technology-based problem solving.

- Numeracy for U.S. millennials has been dropping across all percentiles since at least 2003.

See the online report here.

2015-04-20

Causes of College Cost Inflation

From testimony at Ohio State (link):

- Decreased state funding

- Administrative bloat

- Cost of athletics

2015-04-13

Pupils Prefer Paper

You may have already seen this article on the work of Naomi S. Baron at American University: her studies show that for textbook-style reading and studying, young college students still prefer paper books over digital options. Why? Because of reading.

In years of surveys, Baron asked students what they liked least about reading in print. Her favorite response: “It takes me longer because I read more carefully.”...

Another significant problem, especially for college students, is distraction. The lives of millennials are increasingly lived on screens. In her surveys, Baron writes that she found “jaw-dropping” results to the question of whether students were more likely to multitask in hard copy (1 percent) vs. reading on-screen (90 percent).

Read the article at the Washington Post.

2015-04-06

Academically Adrift Again

One more time, as we've pointed out here before (link), in this case from Jonathan Wai of Duke University: "the rank order of cognitive skills of various majors and degree holders has remained remarkably constant for the last seven decades", with Education majors perennially the very lowest of performers (closely followed by Business and the Social Sciences).

See Wai's article and charts here.

2015-03-30

Newtonian Weapons

The ponderous instrument of synthesis, so effective in Newton's hands, has never since been grasped by anyone who could use it for such purpose; and we gaze at it with admiring curiosity, as some gigantic implement of war, which stands idle among the memorials of ancient days, and makes us wonder what manner of man he was who could wield as a weapon what we can hardly lift as a burden.

- William Whewell on Newton’s geometric proofs, 1847 (thanks to JWS for pointing this out to me).

2015-03-23

Average Woman's Height

A quick observation: statements like "the average woman's height is 64 inches" are almost always misinterpreted. Here's the main problem: people think that this is referencing an archetypal "average woman", when it's not.

The proper parsing is not "the (average woman's) height"... but it is "the average (woman's height)". See the difference? It's really a statement about the variable "woman's height", which has been measured many times, and then averaged. In short, it's not about an "average woman", but rather an "average height" (of women).

Of course, this misunderstanding is frequently intentionally mined for comedy. See yesterday's SMBC comic (as I write this), for example: here.
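A tiny numerical illustration of the "average (woman's height)" parsing, as a sketch only: the six heights below are made-up numbers chosen for the example, not real survey data. The average is a summary of the variable across all measurements, and nobody in the sample needs to actually have that height.

```python
# Hypothetical measurements (in inches) of the variable "woman's height".
# These values are invented for illustration, not real survey data.
heights = [60, 62, 63, 65, 66, 68]

# "The average (woman's height)": one number summarizing the whole variable.
avg = sum(heights) / len(heights)
print(avg)  # 64.0

# There is no "average woman" here: no one in the sample is exactly 64 inches.
print(any(h == avg for h in heights))  # False
```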

2015-03-16

Identifying Proportions Proposal